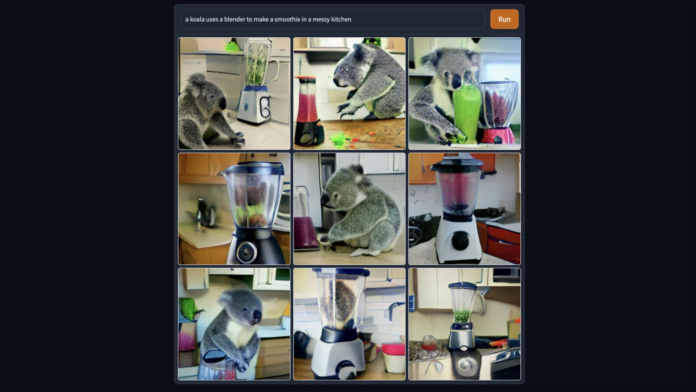

The DALL-E Mini software from a group of open-source developers isn’t perfect, but sometimes it does effectively produce pictures that match people’s text descriptions.

Screenshot

If you’ve scrolled through your social media feeds recently, there’s a good chance you’ve seen illustrations accompanied by captions. They’re popular right now.

The pictures you’re seeing are likely made possible by a text-to-image program called DALL-E. Before posting the illustrations, people type in words, which are then converted into images by artificial intelligence models.

For example, a Twitter user posted a tweet with the text, “To be or not to be, rabbi holding avocado, marble sculpture.” The attached picture, which is quite elegant, shows a marble statue of a bearded man in a robe and a bowler hat, grasping an avocado.

The AI models come from Google’s Imagen software as well as OpenAI, a start-up backed by Microsoft that developed DALL-E 2. On its website, OpenAI calls DALL-E 2 “a new AI system that can create realistic images and art from a description in natural language.”

But most of what’s happening in this area comes from a relatively small group of people sharing their pictures and, in some cases, generating high engagement. That’s because Google and OpenAI have not made the technology broadly available to the public.

Many of OpenAI’s early users are friends and relatives of employees. If you’re seeking access, you have to join a waiting list and indicate whether you’re a professional artist, developer, academic researcher, journalist or online creator.

“We’re working hard to accelerate access, but it’s likely to take some time until we get to everyone; as of June 15 we have invited 10,217 people to try DALL-E,” OpenAI’s Joanne Jang wrote on a help page on the company’s website.

One system that is publicly available is DALL-E Mini. It draws on open-source code from a loosely organized team of developers and is often overloaded with demand. Attempts to use it can be greeted with a dialog box that says “Too much traffic, please try again.”

It’s a bit reminiscent of Google’s Gmail service, which lured people with unusually generous free email storage in 2004. At first, early adopters could get in by invitation only, leaving millions to wait. Now Gmail is one of the most popular email services in the world.

Creating images out of text may never be as ubiquitous as email. But the technology is certainly having a moment, and part of its appeal lies in the exclusivity.

Private research lab Midjourney requires people to fill out a form if they want to experiment with its image-generation bot from a channel on the Discord chat app. Only a select group of people are using Imagen and posting pictures from it.

The text-to-picture services are sophisticated. They identify the most important parts of a user’s prompt and then guess the best way to illustrate those terms. Google trained its Imagen model with hundreds of its in-house AI chips on 460 million internal image-text pairs, in addition to outside data.

The user interfaces are simple. There’s generally a text box, a button to start the generation process and an area below to display images. To indicate the source, Google and OpenAI add watermarks in the bottom right corner of images from DALL-E 2 and Imagen.

The companies and groups building the software are justifiably concerned about having everyone storm the gates at once. Handling web requests to run queries on these AI models can get expensive. More importantly, the models aren’t perfect and don’t always produce results that accurately represent the world.

Engineers trained the models on vast collections of words and pictures from the web, including photos people posted on Flickr.

OpenAI, which is based in San Francisco, recognizes the potential for harm from a model that learned how to make images by essentially scouring the web. To try to address the risk, employees removed violent content from the training data. Filters also stop DALL-E 2 from generating images if users submit prompts that might violate company policy against nudity, violence, conspiracies or political content.

“There’s an ongoing process of improving the safety of these systems,” said Prafulla Dhariwal, an OpenAI research scientist.

Biases in the results are also important to understand, and they represent a broader concern for AI. Boris Dayma, a developer from Texas, and others who worked on DALL-E Mini spelled out the problem in an explanation of their software.

“Occupations demonstrating higher levels of education (such as engineers, doctors or scientists) or high physical labor (such as in the construction industry) are mostly represented by white men,” they wrote. “In contrast, nurses, secretaries or assistants are typically women, often white as well.”

Google described similar shortcomings of its Imagen model in an academic paper.

Despite the risks, OpenAI is excited about the kinds of things the technology can enable. Dhariwal said it could open up creative opportunities for individuals and could help with commercial applications such as interior design or dressing up websites.

Results should continue to improve over time. DALL-E 2, which was introduced in April, produces more realistic images than the initial version that OpenAI announced in 2021. The company’s text-generation model, GPT, has also become more sophisticated with each generation.

“You can expect that to happen for a lot of these systems,” Dhariwal said.
