MIT researchers unveil the first open-source simulation engine capable of constructing realistic environments for deployable training and testing of autonomous vehicles.

Since they’ve proven to be productive test beds for safely trying out dangerous driving scenarios, hyper-realistic virtual worlds have been heralded as the best driving schools for autonomous vehicles (AVs). Tesla, Waymo, and other self-driving companies all rely heavily on data to enable expensive and proprietary photorealistic simulators, since nuanced I-almost-crashed data usually isn’t easy or desirable to gather and recreate.

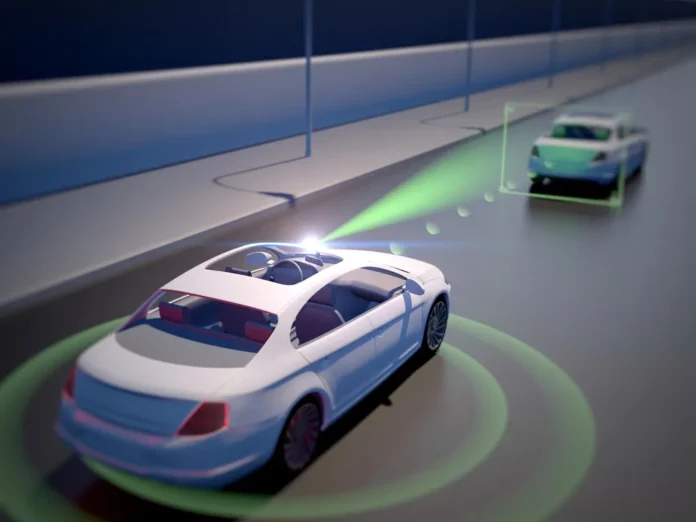

VISTA 2.0 is an open-source simulation engine that can make realistic environments for training and testing self-driving cars. Credit: Image courtesy of MIT CSAIL

With this in mind, scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) created “VISTA 2.0,” a data-driven simulation engine where vehicles can learn to drive in the real world and recover from near-crash scenarios. What’s more, all of the code is being released open-source to the public.

“Today, only companies have software like the type of simulation environments and capabilities of VISTA 2.0, and this software is proprietary. With this release, the research community will have access to a powerful new tool for accelerating the research and development of adaptive robust control for autonomous driving,” says the senior author of a paper about the research, MIT Professor and CSAIL Director Daniela Rus.

VISTA is a data-driven, photorealistic simulator for autonomous driving. It can simulate not just live video but also lidar data and event cameras, and it can incorporate other simulated vehicles to model complex driving situations. VISTA is open source, and the code is publicly available.

VISTA 2.0, which builds on the team’s previous model, VISTA, is fundamentally different from existing AV simulators because it’s data-driven. This means it was built and photorealistically rendered from real-world data, which enables direct transfer to reality. While the initial iteration only supported single-car lane-following with one camera sensor, achieving high-fidelity data-driven simulation required rethinking the foundations of how different sensors and behavioral interactions can be synthesized.

Enter VISTA 2.0: a data-driven system that can simulate complex sensor types and massively interactive scenarios and intersections at scale. Using much less data than previous models, the team was able to train autonomous vehicles that could be substantially more robust than those trained on large amounts of real-world data.

“This is a massive jump in capabilities of data-driven simulation for autonomous vehicles, as well as the increase of scale and ability to handle greater driving complexity,” says Alexander Amini, CSAIL PhD student and co-lead author on two new papers, together with fellow PhD student Tsun-Hsuan Wang. “VISTA 2.0 demonstrates the ability to simulate sensor data far beyond 2D RGB cameras, but also extremely high dimensional 3D lidars with millions of points, irregularly timed event-based cameras, and even interactive and dynamic scenarios with other vehicles as well.”

The team of scientists was able to scale the complexity of the interactive driving tasks for things like overtaking, following, and negotiating, including multiagent scenarios in highly photorealistic environments.

Because most of our data (thankfully) is just run-of-the-mill, day-to-day driving, training AI models for autonomous vehicles involves hard-to-secure fodder of different varieties of edge cases and strange, dangerous scenarios. Logically, we can’t just crash into other cars just to teach a neural network how to not crash into other cars.

Recently, there’s been a shift away from more classic, human-designed simulation environments to those built up from real-world data. The latter have immense photorealism, but the former can easily model virtual cameras and lidars. With this paradigm shift, a key question has emerged: Can the richness and complexity of all of the sensors that autonomous vehicles need, such as lidar and event-based cameras that are more sparse, accurately be synthesized?

Lidar sensor data is much harder to interpret in a data-driven world: you’re effectively trying to generate brand-new 3D point clouds with millions of points, only from sparse views of the world. To synthesize 3D lidar point clouds, the researchers used the data that the car collected, projected it into a 3D space coming from the lidar data, and then let a new virtual vehicle drive around locally from where that original vehicle was. Finally, they projected all of that sensory information back into the frame of view of this new virtual vehicle, with the help of neural networks.
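The geometric core of that last step, re-expressing recorded 3D points in the frame of a displaced virtual vehicle, can be illustrated with a small sketch. This is not VISTA's code: the function name `reproject_points`, the planar offset parameters `dx`, `dy`, `dyaw`, and the x-forward, y-left axis convention are all assumptions made for illustration, and the neural-network stage mentioned above is left out entirely.

```python
import math


def reproject_points(points, dx, dy, dyaw):
    """Express recorded (x, y, z) points in a virtual vehicle's frame.

    Hypothetical helper, not part of VISTA. The virtual vehicle is
    assumed to sit at (dx, dy) in the recorded vehicle's frame and to
    be rotated by dyaw radians about the vertical axis.
    """
    c, s = math.cos(dyaw), math.sin(dyaw)
    reprojected = []
    for x, y, z in points:
        # Move the origin to the virtual vehicle's position.
        tx, ty = x - dx, y - dy
        # Apply the inverse (transpose) of the yaw rotation.
        reprojected.append((c * tx + s * ty, -s * tx + c * ty, z))
    return reprojected


# A point 10 m ahead of the recorded car, seen from a virtual car
# that has moved 2 m forward without turning.
print(reproject_points([(10.0, 0.0, 1.0)], dx=2.0, dy=0.0, dyaw=0.0))
```

A rigid transform like this only relocates the points that were actually measured. The new viewpoint would still have gaps and occlusions, which is presumably where the learned components the researchers describe come in.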

Together with the simulation of event-based cameras, which operate at speeds greater than thousands of events per second, the simulator was capable of not only simulating this multimodal information but also doing so all in real time. This makes it possible to train neural nets offline, but also test online on the car in augmented reality setups for safe evaluations. “The question of if multisensor simulation at this scale of complexity and photorealism was possible in the realm of data-driven simulation was very much an open question,” says Amini.

With that, the driving school becomes a party. In the simulation, you can move around, have different types of controllers, simulate different types of events, create interactive scenarios, and just drop in brand-new vehicles that weren’t even in the original data. They tested for lane following, lane turning, car following, and more dicey scenarios like static and dynamic overtaking (seeing obstacles and moving around so you don’t collide). With multi-agent simulation, both real and simulated agents interact, and new agents can be dropped into the scene and controlled any which way.

Taking their full-scale car out into the “wild” (a.k.a. Devens, Massachusetts), the team saw immediate transferability of results, with both failures and successes. They were also able to demonstrate the bodacious, magic word of self-driving car models: “robust.” They showed that AVs, trained entirely in VISTA 2.0, were so robust in the real world that they could handle that elusive tail of challenging failures.

Now, one guardrail humans rely on that can’t yet be simulated is human emotion. It’s the friendly wave, nod, or blinker switch of acknowledgment, which are the type of nuances the team wants to implement in future work.

“The central algorithm of this research is how we can take a dataset and build a completely synthetic world for learning and autonomy,” says Amini. “It’s a platform that I believe one day could extend in many different axes across robotics. Not just autonomous driving, but many areas that rely on vision and complex behaviors. We’re excited to release VISTA 2.0 to help enable the community to collect their own datasets and convert them into virtual worlds where they can directly simulate their own virtual autonomous vehicles, drive around these virtual terrains, train autonomous vehicles in these worlds, and then can directly transfer them to full-sized, real self-driving cars.”

Reference: “VISTA 2.0: An Open, Data-driven Simulator for Multimodal Sensing and Policy Learning for Autonomous Vehicles” by Alexander Amini, Tsun-Hsuan Wang, Igor Gilitschenski, Wilko Schwarting, Zhijian Liu, Song Han, Sertac Karaman and Daniela Rus, 23 November 2021, Computer Science > Robotics.

arXiv: 2111.12083

Amini and Wang wrote the paper alongside Zhijian Liu, MIT CSAIL PhD student; Igor Gilitschenski, assistant professor in computer science at the University of Toronto; Wilko Schwarting, AI research scientist and MIT CSAIL PhD ’20; Song Han, associate professor at MIT’s Department of Electrical Engineering and Computer Science; Sertac Karaman, associate professor of aeronautics and astronautics at MIT; and Daniela Rus, MIT professor and CSAIL director. The researchers presented the work at the IEEE International Conference on Robotics and Automation (ICRA) in Philadelphia.

This work was supported by the National Science Foundation and Toyota Research Institute. The team acknowledges the support of NVIDIA with the donation of the Drive AGX Pegasus.