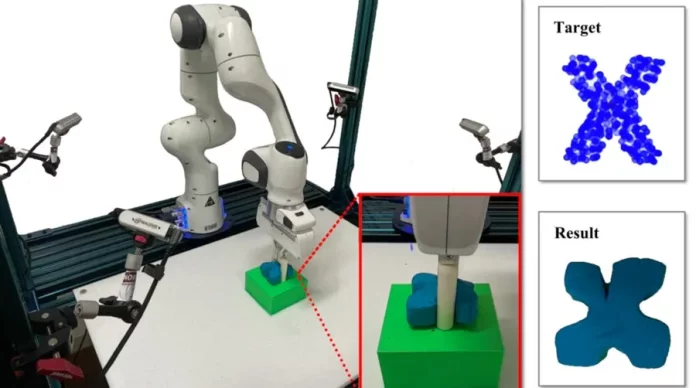

Researchers manipulate elasto-plastic objects into target shapes from visual cues. Credit: MIT CSAIL

Robots manipulate soft, deformable material into various shapes from visual inputs in a new system that could one day enable better home assistants.

The inner child in many of us feels an overwhelming sense of delight when coming across a pile of the fluorescent, rubbery mixture of water, salt, and flour that put goo on the map: play dough. (Even if this seldom happens in adulthood.)

While manipulating play dough is fun and easy for 2-year-olds, the shapeless sludge is quite hard for robots to handle. Machines have become increasingly reliable with rigid objects, but manipulating soft, deformable objects comes with a laundry list of technical challenges. One key to the difficulty is that, as with most flexible structures, if you move one part, you're likely affecting everything else.

Recently, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Stanford University let robots try their hand at playing with the modeling compound, but not for nostalgia’s sake. Their new system, called “RoboCraft,” learns directly from visual inputs to let a robot with a two-fingered gripper see, simulate, and shape doughy objects. It could reliably plan a robot’s behavior to pinch and release play dough to make various letters, including ones it had never seen. In fact, with just 10 minutes of data, the two-finger gripper rivaled human counterparts who teleoperated the machine, performing on par, and at times even better, on the tested tasks.

“Modeling and manipulating objects with high degrees of freedom are essential capabilities for robots to learn how to enable complex industrial and household interaction tasks, like stuffing dumplings, rolling sushi, and making pottery,” says Yunzhu Li, CSAIL PhD student and author of a new paper about RoboCraft. “While there’s been recent advances in manipulating clothes and ropes, we found that objects with high plasticity, like dough or plasticine, despite ubiquity in those household and industrial settings, was a largely underexplored territory. With RoboCraft, we learn the dynamics models directly from high-dimensional sensory data, which offers a promising data-driven avenue for us to perform effective planning.”

When working with undefined, smooth materials, the whole structure needs to be accounted for before any kind of efficient and effective modeling and planning can be done. RoboCraft uses a graph neural network as the dynamics model and turns images into graphs of small particles, which, together with planning algorithms, yield more accurate predictions about the material’s change in shape.
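As a rough illustration of what "turning particles into a graph" can mean, the sketch below links every pair of particles that lie within a fixed distance of each other. The function name `build_particle_graph`, the `radius` parameter, and the radius-based connection rule are assumptions made for this example; the article does not say how RoboCraft builds its graphs.

```python
import numpy as np

def build_particle_graph(points, radius):
    """Return directed edges (i, j) between distinct particles closer than `radius`.

    Illustrative assumption: neighbors are chosen by a simple distance threshold.
    """
    # Pairwise distances between all particles, shape (N, N).
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    # Keep close pairs, excluding each particle's edge to itself.
    mask = (dists < radius) & ~np.eye(len(points), dtype=bool)
    senders, receivers = np.nonzero(mask)
    return np.stack([senders, receivers], axis=1)

# Three toy particles: the first two are close together, the third is far away.
points = np.array([[0.0, 0.0, 0.0],
                   [0.1, 0.0, 0.0],
                   [1.0, 0.0, 0.0]])
edges = build_particle_graph(points, radius=0.5)
```

A graph neural network would then pass information along these edges, which is one way to capture the idea that moving one part of the dough affects its neighbors.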

RoboCraft uses only visual data rather than the complex physics simulators that researchers often use to model and understand the dynamics and forces acting on objects. Three components work together within the system to shape soft material into, say, an “R.”

Perception, the first part of the system, is all about learning to “see.” It uses cameras to collect raw visual sensor data from the environment, which are then turned into little clouds of particles to represent the shapes. A graph-based neural network uses this particle data to learn to “simulate” the object’s dynamics, or how it moves. Armed with the training data from many pinches, algorithms then help plan the robot’s behavior so it learns to “shape” a blob of dough. While the letters are a little sloppy, they’re unmistakably representative.
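The "simulate, then shape" loop can be pictured with a toy planner: sample candidate actions, predict the resulting particles with a dynamics model, and keep the action whose prediction lands closest to the target shape. Everything in this sketch is an assumption for illustration: `toy_dynamics` is a hypothetical stand-in for a learned model, random sampling stands in for whatever planner RoboCraft uses, and Chamfer distance is just one common way to compare point clouds.

```python
import numpy as np

def chamfer_distance(a, b):
    """Symmetric average nearest-neighbor distance between two point clouds."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return d.min(axis=1).mean() + d.min(axis=0).mean()

def toy_dynamics(particles, action):
    # Hypothetical stand-in for a learned dynamics model:
    # it simply shifts every particle by the action vector.
    return particles + action

def plan_by_random_sampling(particles, target, dynamics,
                            n_samples=256, scale=1.0, seed=0):
    """Pick the sampled action whose predicted outcome is closest to the target."""
    rng = np.random.default_rng(seed)
    candidates = rng.uniform(-scale, scale,
                             size=(n_samples, particles.shape[1]))
    costs = [chamfer_distance(dynamics(particles, a), target)
             for a in candidates]
    best = int(np.argmin(costs))
    return candidates[best], costs[best]

start = np.array([[0.0, 0.0, 0.0],
                  [1.0, 0.0, 0.0],
                  [0.0, 1.0, 0.0],
                  [0.0, 0.0, 1.0]])
target = start + np.array([0.5, 0.0, 0.0])
action, cost = plan_by_random_sampling(start, target, toy_dynamics)
```

In a real system the stand-in model would be replaced by the trained graph network, and the chosen action would be a gripper pinch rather than a uniform shift.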

Besides making cutesy shapes, the team of researchers is (actually) working on making dumplings from dough and a prepared filling. That’s a lot to ask at the moment with only a two-finger gripper. RoboCraft would need additional tools, such as a rolling pin, a stamp, and a mold (much as a baker needs multiple tools to work effectively).

A more distant application the researchers envision is using RoboCraft to assist with household tasks and chores, which could be of particular help to the elderly or those with limited mobility. To accomplish this, given the many obstructions that could occur, a much more adaptive representation of the dough or item would be needed, along with an exploration into what class of models might be suitable to capture the underlying structural systems.

“RoboCraft essentially demonstrates that this predictive model can be learned in very data-efficient ways to plan motion. In the long run, we are thinking about using various tools to manipulate materials,” says Li. “If you think about dumpling or dough making, just one gripper wouldn’t be able to solve it. Helping the model understand and accomplish longer-horizon planning tasks, such as, how the dough will deform given the current tool, movements and actions, is a next step for future work.”

Li wrote the paper alongside Haochen Shi, Stanford master’s student; Huazhe Xu, Stanford postdoc; Zhiao Huang, PhD student at the University of California at San Diego; and Jiajun Wu, assistant professor at Stanford. They will present the research at the Robotics: Science and Systems conference in New York City. The work is supported in part by the Stanford Institute for Human-Centered AI (HAI), the Samsung Global Research Outreach (GRO) Program, the Toyota Research Institute (TRI), and Amazon, Autodesk, Salesforce, and Bosch.