Ballyhooed artificial-intelligence technique known as “deep learning” revives 70-year-old idea.

In the past 10 years, the best-performing artificial-intelligence systems, such as the speech recognizers on smartphones or Google’s latest automatic translator, have resulted from a technique called “deep learning.”

Deep learning is in fact a new name for an approach to artificial intelligence called neural networks, which have been going in and out of fashion for more than 70 years. Neural networks were first proposed in 1944 by Warren McCulloch and Walter Pitts, two University of Chicago researchers who moved to MIT in 1952 as founding members of what’s sometimes called the first cognitive science department.

Neural nets were a major area of research in both neuroscience and computer science until 1969, when, according to computer science lore, they were killed off by the MIT mathematicians Marvin Minsky and Seymour Papert, who a year later would become co-directors of the new MIT Artificial Intelligence Laboratory.

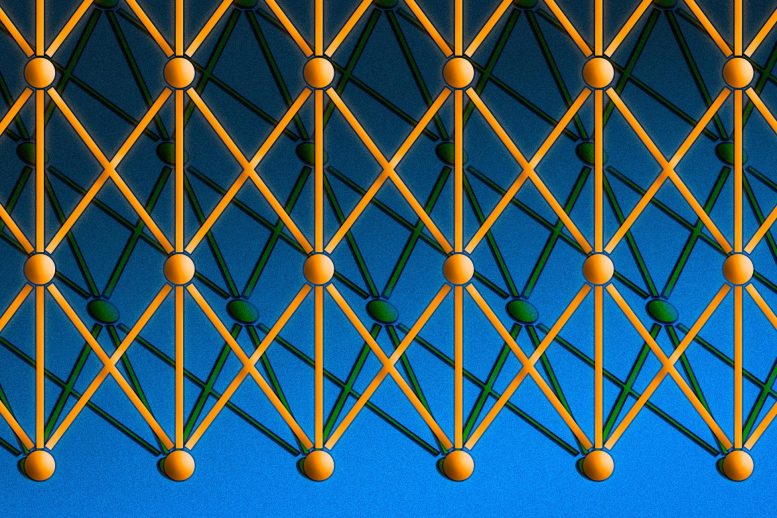

Most applications of deep learning use “convolutional” neural networks, in which the nodes of each layer are clustered, the clusters overlap, and each cluster feeds data to multiple nodes (orange and green) of the next layer. Credit: Jose-Luis Olivares/MIT
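The overlapping clusters in the caption can be sketched as a one-dimensional convolution. This minimal example assumes a window of three input nodes that slides one position at a time, plus two hand-picked weight sets that stand in, hypothetically, for the orange and green nodes. Each window of inputs feeds one node per weight set in the next layer, and neighboring windows share inputs. Real convolutional networks usually operate on 2D images and learn their weights; the name `conv1d` is illustrative only.

```python
def conv1d(inputs, kernels, stride=1):
    """Slide each shared weight set (kernel) over overlapping windows of inputs.

    Returns one list per window, holding one output per kernel, i.e. each
    cluster of input nodes feeds several nodes of the next layer.
    """
    size = len(kernels[0])
    layer = []
    for start in range(0, len(inputs) - size + 1, stride):
        window = inputs[start:start + size]  # one overlapping "cluster" of nodes
        layer.append([sum(x * w for x, w in zip(window, k)) for k in kernels])
    return layer


# Two hypothetical weight sets: a local sum and a local difference detector.
print(conv1d([1, 2, 3, 4, 5], [[1, 1, 1], [1, 0, -1]]))
# → [[6, -2], [9, -2], [12, -2]]
```

The windows [1, 2, 3], [2, 3, 4], and [3, 4, 5] overlap by two inputs, so the middle input values contribute to several next-layer nodes.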

The technique then enjoyed a resurgence in the 1980s, fell into eclipse again in the first decade of the new century, and has returned like gangbusters in the second, fueled largely by the increased processing power of graphics chips.

“There’s this idea that ideas in science are a bit like epidemics of viruses,” says Tomaso Poggio, the Eugene McDermott Professor of Brain and Cognitive Sciences at MIT, an investigator at MIT’s McGovern Institute for Brain Research, and director of MIT’s Center for Brains, Minds, and Machines. “There are apparently five or six basic strains of flu viruses, and apparently each one comes back with a period of around 25 years. People get infected, and they develop an immune response, and so they don’t get infected for the next 25 years. And then there is a new generation that is ready to be infected by the same strain of virus. In science, people fall in love with an idea, get excited about it, hammer it to death, and then get immunized: they get tired of it. So ideas should have the same kind of periodicity!”

Weighty matters

Neural nets are a means of doing machine learning, in which a computer learns to perform some task by analyzing training examples. Usually, the examples have been hand-labeled in advance. An object recognition system, for instance, might be fed thousands of labeled images of cars, houses, coffee cups, and so on, and it would find visual patterns in the images that consistently correlate with particular labels.

Modeled loosely on the human brain, a neural net consists of thousands or even millions of simple processing nodes that are densely interconnected. Most of today’s neural nets are organized into layers of nodes, and they’re “feed-forward,” meaning that data moves through them in only one direction. An individual node might be connected to several nodes in the layer beneath it, from which it receives data, and several nodes in the layer above it, to which it sends data.

To each of its incoming connections, a node will assign a number known as a “weight.” When the network is active, the node receives a different data item (a different number) over each of its connections and multiplies it by the associated weight. It then adds the resulting products together, yielding a single number. If that number is below a threshold value, the node passes no data to the next layer. If the number exceeds the threshold value, the node “fires,” which in today’s neural nets generally means sending the number, the sum of the weighted inputs, along all its outgoing connections.
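The firing rule just described fits in a few lines of Python. This is a minimal sketch, assuming that “passes no data” can be represented as an output of 0.0 and that a sum exactly equal to the threshold does not fire; the helper names and the example weights are hypothetical. `feed_forward` pushes the values through each layer in turn, in one direction only.

```python
def node_output(inputs, weights, threshold):
    """Weighted sum of the inputs; sent onward only if it exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return total if total > threshold else 0.0  # 0.0 stands in for "no data"


def feed_forward(inputs, layers):
    """Each layer is a list of (weights, threshold) pairs, one pair per node."""
    for layer in layers:
        inputs = [node_output(inputs, w, t) for w, t in layer]
    return inputs


# Hypothetical two-layer net: two hidden nodes feeding one output node.
layers = [
    [([0.5, 0.5], 1.0), ([1.0, -1.0], 0.0)],  # hidden layer
    [([1.0, 1.0], 0.5)],                      # output layer
]
print(feed_forward([1.0, 2.0], layers))  # → [1.5]
```

Here the first hidden node sums to 1.5 and fires, the second sums to -1.0 and stays silent, and the output node receives 1.5 and 0.0, sums them to 1.5, and fires.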

When a neural net is being trained, all of its weights and thresholds are initially set to random values. Training data is fed to the bottom layer (the input layer), and it passes through the succeeding layers, getting multiplied and added together in complex ways, until it finally arrives, radically transformed, at the output layer. During training, the weights and thresholds are continually adjusted until training data with the same labels consistently yield similar outputs.

Minds and machines

The neural nets described by McCulloch and Pitts in 1944 had thresholds and weights, but they weren’t arranged into layers, and the researchers didn’t specify any training mechanism. What McCulloch and Pitts showed was that a neural net could, in principle, compute any function that a digital computer could. The result was more neuroscience than computer science: The point was to suggest that the human brain could be thought of as a computing device.

Neural nets continue to be a valuable tool for neuroscientific research. For instance, particular network layouts or rules for adjusting weights and thresholds have reproduced observed features of human neuroanatomy and cognition, an indication that they capture something about how the brain processes information.

The first trainable neural network, the Perceptron, was demonstrated by the Cornell University psychologist Frank Rosenblatt in 1957. The Perceptron’s design was much like that of the modern neural net, except that it had only one layer with adjustable weights and thresholds, sandwiched between input and output layers.
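A Perceptron-style training loop can be sketched with the classic perceptron learning rule: whenever the node’s output is wrong, nudge each weight in proportion to its input and shift the threshold the opposite way. The sketch below starts from random weights and a random threshold and learns logical AND, a toy task chosen here for illustration. The learning rate, epoch limit, seed, and function names are arbitrary assumptions, and Rosenblatt’s original Perceptron was a hardware machine, not this code.

```python
import random


def predict(x, weights, threshold):
    """Step unit: fire (1) if the weighted sum exceeds the threshold, else 0."""
    total = sum(xi * wi for xi, wi in zip(x, weights))
    return 1 if total > threshold else 0


def train_perceptron(samples, lr=0.1, max_epochs=1000, seed=0):
    rng = random.Random(seed)
    n_inputs = len(samples[0][0])
    # Weights and threshold start at random values.
    weights = [rng.uniform(-1, 1) for _ in range(n_inputs)]
    threshold = rng.uniform(-1, 1)
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(x, weights, threshold)  # -1, 0, or +1
            if error:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                threshold -= lr * error  # a higher threshold makes firing harder
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, threshold


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, t = train_perceptron(AND)
print([predict(x, w, t) for x, _ in AND])  # → [0, 0, 0, 1]
```

Because AND is linearly separable, the perceptron convergence theorem guarantees that this loop stops making mistakes after a finite number of updates.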

Perceptrons were an active area of research in both psychology and the fledgling discipline of computer science until 1969, when Minsky and Papert published a book titled “Perceptrons,” which demonstrated that executing certain fairly common computations on Perceptrons would be impractically time consuming.

“Of course, all of these limitations kind of disappear if you take machinery that is a little more complicated, like, two layers,” Poggio says. But at the time, the book had a chilling effect on neural-net research.
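The usual textbook illustration of that point is XOR (exclusive or), which outputs 1 when exactly one of its two inputs is 1. No single threshold node can compute it, because no straight line separates its positive cases from its negative ones, but two layers of the same kind of node can. The weights below are set by hand for clarity rather than learned, and the names are hypothetical: one hidden node acts as OR, the other as AND, and the output node fires when OR is on and AND is off.

```python
def step_node(inputs, weights, threshold):
    """Fire (1) if the weighted sum of the inputs exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def xor_two_layer(x1, x2):
    # Hidden layer: hand-set weights and thresholds.
    h_or = step_node((x1, x2), (1, 1), 0.5)   # fires if at least one input is 1
    h_and = step_node((x1, x2), (1, 1), 1.5)  # fires only if both inputs are 1
    # Output layer: "OR but not AND".
    return step_node((h_or, h_and), (1, -1), 0.5)


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_two_layer(a, b))  # outputs 0, 1, 1, 0 in that order
```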

“You have to put these things in historical context,” Poggio says. “They were arguing for programming, for languages like Lisp. Not many years before, people were still using analog computers. It was not clear at all at the time that programming was the way to go. I think they went a little bit overboard, but as usual, it’s not black and white. If you think of this as this competition between analog computing and digital computing, they fought for what at the time was the right thing.”

Periodicity

By the 1980s, however, researchers had developed algorithms for modifying neural nets’ weights and thresholds that were efficient enough for networks with more than one layer, removing many of the limitations identified by Minsky and Papert. The field enjoyed a renaissance.

But intellectually, there’s something unsatisfying about neural nets. Enough training may revise a network’s settings to the point that it can usefully classify data, but what do those settings mean? What image features is an object recognizer looking at, and how does it piece them together into the distinctive visual signatures of cars, houses, and coffee cups? Looking at the weights of individual connections won’t answer that question.

In recent years, computer scientists have begun to come up with ingenious methods for deducing the analytic strategies adopted by neural nets. But in the 1980s, the networks’ strategies were indecipherable. So around the turn of the century, neural networks were supplanted by support vector machines, an alternative approach to machine learning that’s based on some very clean and elegant mathematics.

The recent resurgence in neural networks, the deep-learning revolution, comes courtesy of the computer-game industry. The complex imagery and rapid pace of today’s video games require hardware that can keep up, and the result has been the graphics processing unit (GPU), which packs thousands of relatively simple processing cores on a single chip. It didn’t take long for researchers to realize that the architecture of a GPU is remarkably like that of a neural net.

Modern GPUs enabled the one-layer networks of the 1960s and the two- to three-layer networks of the 1980s to blossom into the 10-, 15-, even 50-layer networks of today. That’s what the “deep” in “deep learning” refers to: the depth of the network’s layers. And currently, deep learning is responsible for the best-performing systems in almost every area of artificial-intelligence research.

Under the hood

The networks’ opacity is still unsettling to theorists, but there’s headway on that front, too. In addition to directing the Center for Brains, Minds, and Machines (CBMM), Poggio leads the center’s research program in Theoretical Frameworks for Intelligence. Recently, Poggio and his CBMM colleagues have released a three-part theoretical study of neural networks.

The first part, which was published in the International Journal of Automation and Computing, addresses the range of computations that deep-learning networks can execute and when deep networks offer advantages over shallower ones. Parts two and three, which have been released as CBMM technical reports, address the problems of global optimization, or guaranteeing that a network has found the settings that best accord with its training data, and overfitting, or cases in which the network becomes so attuned to the specifics of its training data that it fails to generalize to other instances of the same categories.

There are still plenty of theoretical questions to be answered, but CBMM researchers’ work could help ensure that neural networks finally break the generational cycle that has brought them in and out of favor for seven decades.