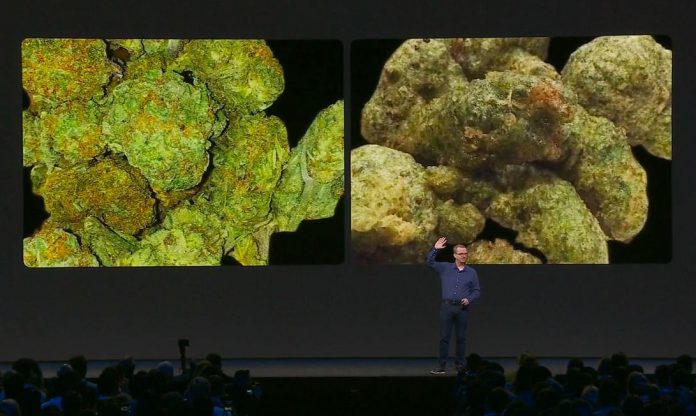

Facebook CTO Mike Schroepfer says Facebook's AI can tell the difference between pictures of cannabis (left) and broccoli tempura (right).

Screenshot by Stephen Shankland/CNET

Facebook uses both people and artificial intelligence to combat some of its thorniest problems, including hate speech, misinformation and election meddling. Now, the social network is doubling down on AI.

The tech giant has come under fire for a series of lapses, including its failure to take down a live video of a terrorist attack in New Zealand that killed 50 people at two mosques. Content moderators who review posts shared by the social network's 2.3 billion users say they've suffered trauma from repeatedly looking at gruesome and violent content. But AI has also helped Facebook flag spam, fake accounts, nudity and other offensive content before a user reports it to the social network. Overall, AI has had mixed results.

Facebook CTO Mike Schroepfer on Wednesday acknowledged that AI hasn't been a cure-all for the social network's "complex problems," but he said the company is making progress. He made the remarks in a keynote at the company's F8 developer conference.

Schroepfer showed the audience pictures of cannabis and broccoli tempura, which look surprisingly similar. Facebook engineers, he said, built a new algorithm that can detect differences between similar-looking images, allowing a computer to tell which was which.


Schroepfer said similar techniques can be used to help machines recognize other images that might otherwise escape the social network's detection.

"If someone reports something like this," he said, "we can then fan out and look at billions of images in a very short period of time and find things that look similar."

Facebook, which doesn't allow the sale of recreational drugs on its platform, found that people tried to get around its system by showing packaging or baked goods, such as Rice Krispies treats. The social network can now flag those images by combining signals such as the text in a post, the comments and the identity of the user.

"This is an intensely adversarial game," Schroepfer said. "We build a new technique, we deploy it, people work hard to try to figure out ways around this."

Identifying the right images isn't the only AI challenge the company is facing. When it was building a smart camera for its Portal video chat device, Facebook had to make sure the technology wasn't biased and could recognize people of different ages, genders and skin tones.

Facebook is also trying to train its computers to learn with less supervision in order to tackle hate speech during elections.

But as the social network uses AI to moderate more content, it also has to address concerns about whether it's being fair to all groups. Facebook, for example, has been accused of suppressing conservative speech, though the company has denied those claims. And people might disagree about what counts as hate speech or misinformation.

Facebook data scientist Isabel Kloumann said in an interview that when the company is determining what counts as hate speech, the identity of the speaker might be an important factor, along with who they're targeting. At the same time, Facebook has to balance safety concerns against whether it's treating groups of people equally.

"We don't have a silver bullet for this," she said. "But the fact that we're having this conversation is the most important thing."

Originally published May 1, 1:46 p.m. PT

Update, 5:19 p.m.: Adds comments from a Facebook data scientist and more background.