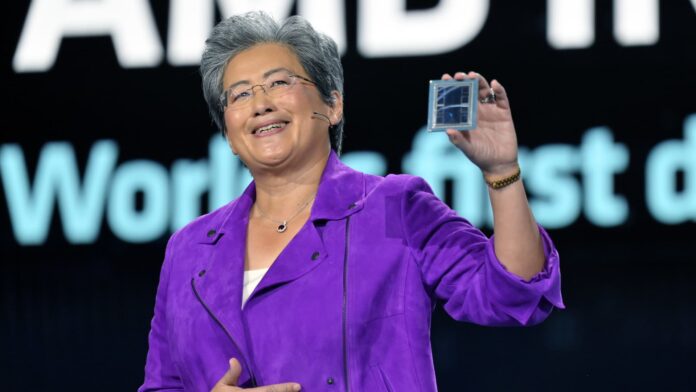

Lisa Su displays an AMD Instinct MI300 chip as she delivers a keynote address at CES 2023 in Las Vegas, Nevada, on Jan. 4, 2023.

David Becker | Getty Images

Meta, OpenAI, and Microsoft said at an AMD investor event Wednesday that they will use AMD’s newest AI chip, the Instinct MI300X. It’s the biggest sign so far that technology companies are searching for alternatives to the expensive Nvidia graphics processors that have been essential for creating and deploying artificial intelligence programs such as OpenAI’s ChatGPT.

If AMD’s latest high-end chip is good enough for the technology companies and cloud service providers building and serving AI models when it starts shipping early next year, it could lower the cost of developing AI models and put competitive pressure on Nvidia’s surging AI chip sales growth.

“All of the interest is in big iron and big GPUs for the cloud,” AMD CEO Lisa Su said Wednesday.

AMD says the MI300X is based on a new architecture, which often leads to significant performance gains. Its most distinctive feature is that it has 192 GB of a cutting-edge, high-performance type of memory known as HBM3, which transfers data faster and can fit larger AI models.
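To make “can fit larger AI models” concrete, here is a rough, weights-only sketch of how many model parameters 192 GB of memory could hold at common numeric precisions. It assumes GB means 10^9 bytes and ignores activations, the key-value cache, and framework overhead, so real-world capacity would be lower; the function name `max_params_billions` is purely illustrative.

```python
# Rough, weights-only estimate of how many model parameters fit in a given
# amount of accelerator memory. Assumptions (not from the article): GB means
# 10**9 bytes; activations, KV cache, and framework overhead are ignored.

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4,
    "fp16": 2,  # bf16 uses the same 2 bytes
    "int8": 1,
}


def max_params_billions(memory_gb: float, dtype: str) -> float:
    """Return the largest parameter count (in billions) whose weights alone fit."""
    total_bytes = memory_gb * 10**9
    return total_bytes / BYTES_PER_PARAM[dtype] / 10**9


if __name__ == "__main__":
    # 192 GB is the MI300X memory capacity cited above.
    for dtype in ("fp32", "fp16", "int8"):
        print(f"{dtype}: ~{max_params_billions(192, dtype):.0f}B parameters")
```

At 16-bit precision this works out to roughly 96 billion parameters of weights on a single chip, which is why memory capacity matters for serving large models without splitting them across several GPUs.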

Su directly compared the MI300X and the systems built with it to Nvidia’s main AI GPU, the H100.

“What this performance does is it just directly translates into a better user experience,” Su said. “When you ask a model something, you’d like it to come back faster, especially as responses get more complicated.”

The main question facing AMD is whether companies that have been building on Nvidia will invest the time and money to add another GPU supplier. “It takes work to adopt AMD,” Su said.

AMD on Wednesday told investors and partners that it had improved its software suite, called ROCm, to compete with Nvidia’s industry-standard CUDA software, addressing a key shortcoming that had been one of the main reasons AI developers currently prefer Nvidia.

Price will also be important. AMD didn’t reveal pricing for the MI300X on Wednesday, but Nvidia’s chip can cost around $40,000 apiece, and Su told reporters that AMD’s chip would have to cost less to buy and operate than Nvidia’s in order to persuade customers to purchase it.

Who says they’ll use the MI300X?

AMD MI300X accelerator for artificial intelligence.

On Wednesday, AMD said it had already signed up some of the companies most hungry for GPUs to use the chip. Meta and Microsoft were the two largest buyers of Nvidia H100 GPUs in 2023, according to a recent report from research firm Omdia.

Meta said it will use MI300X GPUs for AI inference workloads such as processing AI stickers, image editing, and operating its assistant.

Microsoft’s CTO, Kevin Scott, said the company would offer access to MI300X chips through its Azure web service.

Oracle’s cloud will also use the chips.

OpenAI said it would support AMD GPUs in one of its software products, called Triton, which isn’t a large language model like GPT but is used in AI research to access chip features.

AMD isn’t expecting huge sales for the chip yet, projecting only about $2 billion in total data center GPU revenue in 2024. Nvidia reported more than $14 billion in data center sales in the most recent quarter alone, although that metric includes chips other than GPUs.

However, AMD says the total market for AI GPUs could reach $400 billion over the next four years, doubling the company’s previous projection. This shows how high expectations are and how coveted high-end AI chips have become, and why the company is now focusing investor attention on the product line.

Su also suggested to reporters that AMD doesn’t think it needs to beat Nvidia to do well in the market.

“I think it’s clear to say that Nvidia has to be the vast majority of that right now,” Su told reporters, referring to the AI chip market. “We believe it could be $400 billion-plus in 2027. And we could get a nice piece of that.”