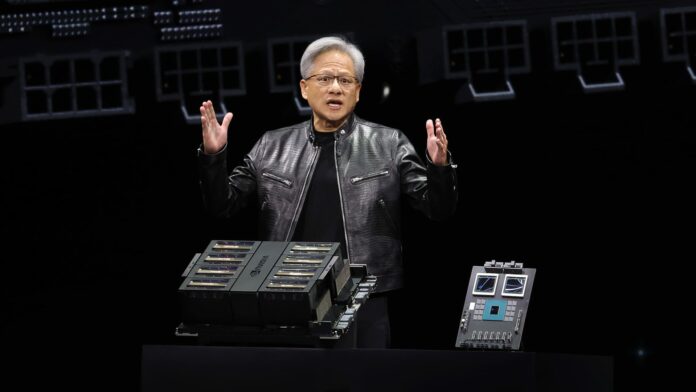

Nvidia CEO Jensen Huang delivers a keynote address during the Nvidia GTC Artificial Intelligence Conference at SAP Center on March 18, 2024, in San Jose, California.

Justin Sullivan | Getty Images

Nvidia on Monday announced a new generation of artificial intelligence chips and software for running artificial intelligence models. The announcement, made during Nvidia’s developer conference in San Jose, comes as the chipmaker seeks to solidify its position as the go-to supplier for AI companies.

Nvidia’s share price is up five-fold and total sales have more than tripled since OpenAI’s ChatGPT kicked off the AI boom in late 2022. Nvidia’s high-end server GPUs are essential for training and deploying large AI models. Companies like Microsoft and Meta have spent billions of dollars buying the chips.

The new generation of AI graphics processors is called Blackwell. The first Blackwell chip is called the GB200 and will ship later this year. Nvidia is enticing its customers with more powerful chips to spur new orders. Companies and software makers, for example, are still scrambling to get their hands on the current generation of “Hopper” H100s and similar chips.

“Hopper is fantastic, but we need bigger GPUs,” Nvidia CEO Jensen Huang said on Monday at the company’s developer conference in California.

Nvidia shares fell more than 1% in extended trading on Monday.

The company also introduced revenue-generating software called NIM that will make it easier to deploy AI, giving customers another reason to stick with Nvidia chips over a rising field of competitors.

Nvidia executives say that the company is becoming less of a mercenary chip provider and more of a platform provider, like Microsoft or Apple, on which other companies can build software.

“Blackwell’s not a chip, it’s the name of a platform,” Huang said.

“The sellable commercial product was the GPU and the software was all to help people use the GPU in different ways,” said Nvidia enterprise VP Manuvir Das in an interview. “Of course, we still do that. But what’s really changed is, we really have a commercial software business now.”

Das said Nvidia’s new software will make it easier to run programs on any of Nvidia’s GPUs, even older ones that might be better suited for deploying but not building AI.

“If you’re a developer, you’ve got an interesting model you want people to adopt, if you put it in a NIM, we’ll make sure that it’s runnable on all our GPUs, so you reach a lot of people,” Das said.

Meet Blackwell, the successor to Hopper

Nvidia’s GB200 Grace Blackwell Superchip, with two B200 graphics processors and one Arm-based central processor.

Every two years Nvidia updates its GPU architecture, unlocking a big jump in performance. Many of the AI models released over the past year were trained on the company’s Hopper architecture, used by chips such as the H100, which was announced in 2022.

Nvidia says Blackwell-based processors, like the GB200, offer a huge performance upgrade for AI companies, with 20 petaflops in AI performance versus 4 petaflops for the H100. The additional processing power will enable AI companies to train bigger and more intricate models, Nvidia said.
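As a rough check on the figures above, the quoted numbers work out to a five-fold difference. The short Python sketch below only divides the two values Nvidia gave; it assumes both figures describe the same kind of measurement, which the article does not specify, and the function name `speedup` is illustrative.

```python
# Figures quoted by Nvidia in the article, in petaflops of "AI performance".
# Assumption: both numbers measure the same thing; the article does not say.
BLACKWELL_PFLOPS = 20.0
H100_PFLOPS = 4.0


def speedup(new_pflops: float, old_pflops: float) -> float:
    """Return how many times larger the new figure is than the old one."""
    if old_pflops <= 0:
        raise ValueError("old_pflops must be positive")
    return new_pflops / old_pflops


ratio = speedup(BLACKWELL_PFLOPS, H100_PFLOPS)
print(f"Quoted speedup: {ratio:.1f}x")  # prints "Quoted speedup: 5.0x"
```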

The chip includes what Nvidia calls a “transformer engine” specifically built to run transformers-based AI, one of the core technologies underpinning ChatGPT.

The Blackwell GPU is large and combines two separately manufactured dies into one chip manufactured by TSMC. It will also be available as an entire server called the GB200 NVLink 2, combining 72 Blackwell GPUs and other Nvidia parts designed to train AI models.

Nvidia CEO Jensen Huang compares the size of the new “Blackwell” chip versus the current “Hopper” H100 chip at the company’s developer conference, in San Jose, California.

Nvidia

Amazon, Google, Microsoft, and Oracle will sell access to the GB200 through cloud services. The GB200 pairs two B200 Blackwell GPUs with one Arm-based Grace CPU. Nvidia said Amazon Web Services would build a server cluster with 20,000 GB200 chips.

Nvidia said that the system can deploy a 27-trillion-parameter model. That’s much larger than even the biggest models, such as GPT-4, which reportedly has 1.7 trillion parameters. Many artificial intelligence researchers believe bigger models with more parameters and data could unlock new capabilities.

Nvidia didn’t provide a cost for the new GB200 or the systems it’s used in. Nvidia’s Hopper-based H100 costs between $25,000 and $40,000 per chip, with whole systems that cost as much as $200,000, according to analyst estimates.

Nvidia will also sell B200 graphics processors as part of a complete system that takes up an entire server rack.

Nvidia inference microservice

Nvidia also announced it’s adding a new product named NIM, which stands for Nvidia Inference Microservice, to its Nvidia enterprise software subscription.

NIM makes it easier to use older Nvidia GPUs for inference, or the process of running AI software, and will allow companies to continue to use the hundreds of millions of Nvidia GPUs they already own. Inference requires less computational power than the initial training of a new AI model. NIM is aimed at companies that want to run their own AI models instead of buying access to AI results as a service from companies like OpenAI.

The strategy is to get customers who buy Nvidia-based servers to sign up for Nvidia enterprise, which costs $4,500 per GPU per year for a license.
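To put the license price in context, the yearly bill scales with the number of GPUs. The Python sketch below uses only the $4,500 figure from the article; it assumes flat per-GPU pricing with no volume discounts, and the 8-GPU server is a hypothetical example, not a configuration named by Nvidia.

```python
# License price quoted in the article: $4,500 per GPU per year.
LICENSE_USD_PER_GPU_PER_YEAR = 4_500


def annual_license_cost(gpu_count: int) -> int:
    """Return the yearly license cost in USD for a given number of GPUs.

    Assumption: pricing is flat per GPU, with no volume discounts.
    """
    if gpu_count < 0:
        raise ValueError("gpu_count must not be negative")
    return gpu_count * LICENSE_USD_PER_GPU_PER_YEAR


# Hypothetical server with 8 GPUs.
print(annual_license_cost(8))  # prints 36000
```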

Nvidia will work with AI companies like Microsoft or Hugging Face to ensure their AI models are tuned to run on all compatible Nvidia chips. Then, using a NIM, developers can efficiently run the model on their own servers or cloud-based Nvidia servers without a lengthy configuration process.

“In my code, where I was calling into OpenAI, I will change one line of code to point it to this NIM that I received from Nvidia instead,” Das said.
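What Das describes might look roughly like the Python sketch below, in which the only line that changes is the base URL the program sends requests to. This is an illustration under stated assumptions, not Nvidia’s documented interface: it assumes the NIM accepts the same request shape as OpenAI’s chat completions endpoint, and the local address, model name, API key placeholder and `build_chat_request` helper are all hypothetical. The request is built but never sent.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
# Hypothetical address for a NIM running on the developer's own server.
NIM_BASE_URL = "http://localhost:8000/v1"

# The "one line" that changes: which service the code points at.
BASE_URL = NIM_BASE_URL  # previously: OPENAI_BASE_URL


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str = "PLACEHOLDER") -> urllib.request.Request:
    """Build (but do not send) a chat-style HTTP request.

    Assumption: the target service accepts an OpenAI-style JSON body.
    """
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# "example-model" is a made-up model name used only for illustration.
request = build_chat_request(BASE_URL, "example-model", "Hello")
print(request.full_url)
```

The rest of the calling code stays the same in this sketch; only `BASE_URL` decides whether the request would go to OpenAI or to the self-hosted service.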

Nvidia says the software will also help AI run on GPU-equipped laptops, instead of on servers in the cloud.